360 degree camera and creating HDR 360 Spherical maps for IBL use

A fellow teacher in the Studio Arts program hooked me up with this latest tidbit: an easy way to shoot and create your own spherical HDR maps. Kodak has a camera out called the PIXPRO SP360 Action Cam. It’s a fairly inexpensive camera that shoots photos and video in 360 degrees.

It shoots HD video, has a 16 MP sensor, is Wi-Fi enabled, and has simple apps for controlling it from your smartphone. There is a whole host of things you can do with the camera; check it out here.

It’s easy to set up and use via the phone app: just connect the camera and phone over Wi-Fi. You basically choose between shooting video or photo, and then choose the format you want to record in: Dome, Panorama, Front, Segment, or Ring. I shot in Dome mode.

The app is very intuitive: click, drag, and toggle, basically. The camera stays linked to your phone at distances of up to 65 feet. The app has an interactive display of what you are shooting and how it will look in whichever mode you shoot in. Click “EV” and you can bracket your exposures.

Slide the exposure left or right and take a photo at each setting to build up your bracketed set.
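For reference, each EV step is a factor of two in exposure, so a bracket spreads your shots across a range of light levels. The five-shot bracket below is a hypothetical example; check which EV offsets your app actually offers.

```python
def bracket_factors(ev_steps):
    """Relative exposure multiplier for each EV offset (+1 EV = twice the light)."""
    return [2.0 ** ev for ev in ev_steps]

# Hypothetical bracket: five shots at -2, -1, 0, +1 and +2 EV
brackets = [-2, -1, 0, 1, 2]
for ev, factor in zip(brackets, bracket_factors(brackets)):
    print(f"{ev:+d} EV -> {factor:g}x the light of the 0 EV shot")
```

A 4 EV spread like this covers a 16x range between the darkest and brightest shot.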

Now you just assemble the photos in Photoshop. I did mine in equirectangular format for the spherical panorama. There are a couple of ways to do this.

What I did was import the files into Photoshop with “File – Automate – Merge to HDR Pro”. Navigate to your photos and load them as a batch. Make sure “Attempt to Automatically Align Source Images” is off; alignment shifts pixels and can change your image’s dimensions.

Click OK. This brings up the Merge to HDR Pro dialog box.

The bottom left of the dialog shows your bracketed exposures.

In HDR Pro, change the mode to 32 bit and, depending on your version of Photoshop, turn off “Complete Toning in Adobe Camera Raw”.
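Photoshop doesn’t spell out exactly how HDR Pro merges the brackets, so here is a generic sketch of the common textbook approach instead: each shot gives its own estimate of the scene’s radiance (pixel value divided by relative exposure), and the estimates are averaged with a weight that trusts mid-tones and ignores clipped blacks and whites. It assumes linear pixel values in [0, 1] and known exposure factors; real camera JPEGs would first need their tone curve undone.

```python
def hat_weight(z):
    """Triangle weight: 1 at mid-grey, 0 at pure black or pure white."""
    return 1.0 - abs(2.0 * z - 1.0)

def merge_hdr(pixel_values, exposure_factors):
    """Merge one pixel's bracketed readings into a single relative radiance.

    pixel_values: linear values in [0, 1], one per bracketed shot (assumed linear).
    exposure_factors: relative exposure of each shot, e.g. 2 ** EV.
    """
    num = 0.0
    den = 0.0
    for z, k in zip(pixel_values, exposure_factors):
        w = hat_weight(z)
        num += w * (z / k)  # this shot's estimate of radiance, weighted
        den += w
    if den == 0.0:
        # Every reading is fully clipped; use the largest estimate as a fallback.
        return max(z / k for z, k in zip(pixel_values, exposure_factors))
    return num / den

factors = [2.0 ** ev for ev in (-2, -1, 0, 1, 2)]
readings = [min(1.0, 3.0 * k) for k in factors]  # a bright highlight, clipped in most shots
print(merge_hdr(readings, factors))  # → 3.0
```

The merged value can go well above 1.0, which is exactly the extra range a 32-bit file keeps and an 8-bit file throws away.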

Now you have an image with a high dynamic range. You can add an Exposure adjustment layer to test it if you’d like. You should be able to dial the exposure up and down and still see the sun’s glow in the brightest areas or detail in the shadows.
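The reason that test works: an exposure control is, roughly, a multiply by 2 to the power of the EV change before the value is clipped for display. The sketch below is a simplified model (linear, no display gamma), not Photoshop’s exact implementation, and the radiance values are hypothetical.

```python
def preview(radiance, ev):
    """Scale linear radiance by 2**ev, then clip to an 8-bit display value (no gamma)."""
    scaled = radiance * (2.0 ** ev)
    return round(255 * min(1.0, max(0.0, scaled)))

sun = 3.0  # hypothetical HDR value, brighter than display white
print(preview(sun, 0))   # → 255  (clipped: looks like flat white)
print(preview(sun, -2))  # → 191  (pulled back into range: the glow is visible)
```

With an 8-bit file the sun would already be stored as 255, so turning the exposure down would only give you flat grey instead of recovered detail.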

You could also upsample the image to gain a little extra resolution: go to Image – Image Size and set the whole image to 200% to double it.

Next, convert the file to an equirectangular image, which needs a 2:1 ratio (360 degrees wide by 180 degrees high). Go to Filter – Distort – Polar Coordinates.

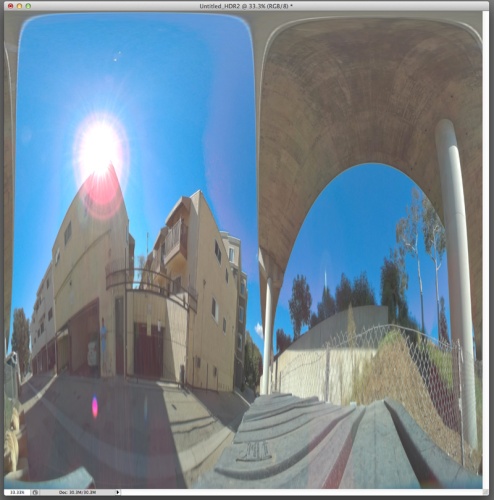

Switch to “Polar to Rectangular”. This is what your image will look like now.

**Note: depending on your version of Photoshop, you may need to do this in a different order. In earlier versions, the Filter menu may be unavailable in 32-bit mode. If that is the case, apply the Polar Coordinates step to each of your bracketed images beforehand, then re-import them with the “Merge to HDR Pro” step.**

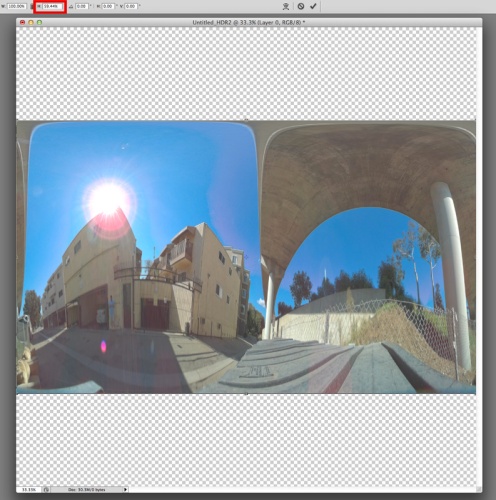
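To show what “Polar to Rectangular” is doing, here is a generic nearest-neighbor sketch of the same kind of unwrap on a small grayscale image stored as a list of rows. Each output column is an angle around the center of the dome, and each output row is a distance from the center. It assumes the camera pointed straight up, so the center of the dome (the zenith) lands on the top row; Photoshop’s own sampling and orientation may differ.

```python
import math

def polar_to_rect(src, out_w, out_h):
    """Unwrap a square dome (fisheye circle) image into an out_w x out_h rectangle.

    Row 0 samples the center of the circle; the last row samples the rim.
    Nearest-neighbor sampling keeps the sketch short.
    """
    size = len(src)                 # assumes a square source image
    cx = cy = (size - 1) / 2.0      # center of the circle, in pixel coordinates
    max_r = size / 2.0              # radius of the circle
    out = []
    for row in range(out_h):
        r = (row + 0.5) / out_h * max_r              # row -> distance from center
        line = []
        for col in range(out_w):
            theta = (col + 0.5) / out_w * 2.0 * math.pi  # column -> angle around center
            sx = round(cx + r * math.cos(theta))
            sy = round(cy + r * math.sin(theta))
            sx = min(max(sx, 0), size - 1)           # clamp to the source bounds
            sy = min(max(sy, 0), size - 1)
            line.append(src[sy][sx])
        out.append(line)
    return out

# A 5x5 "dome" that is bright only at its center
dome = [[9 if (x, y) == (2, 2) else 0 for x in range(5)] for y in range(5)]
flat = polar_to_rect(dome, 8, 5)
print(flat[0])   # top row comes from the center of the dome
print(flat[-1])  # bottom row comes from the rim
```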

The image needs a little resizing because of the lens: we don’t have full 360-degree coverage in height (y). The camera’s lens is a 214-degree ultra-wide, so we’ll do a quick fix. Divide 214 by 360, which gives 59.44 percent, and transform the image height to 59.44 percent: press Command + T and change the height to 59.44%.
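Here is the same arithmetic spelled out, assuming the camera was pointed straight up. A 214-degree lens sees 107 degrees down from the zenith, while the full frame runs 180 degrees from zenith to nadir. 107/180 is the same number as 214/360, which is why the quick fix works.

```python
def coverage_fraction(lens_fov_deg):
    """Fraction of a 180-degree-tall equirectangular frame that a dome shot fills.

    Pointed straight up, a lens with field of view F reaches F/2 degrees down from
    the zenith; dividing by the 180 degrees from zenith to nadir equals F/360.
    """
    return (lens_fov_deg / 2.0) / 180.0

frac = coverage_fraction(214)
print(f"{frac:.2%}")                    # → 59.44%
print(214 / 2 - 90, "degrees below the horizon are covered")  # → 17.0 degrees below the horizon are covered
```

Everything below those 17 degrees is missing from the shot, which is the empty band at the bottom of the frame.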

With snapping turned on under “View – Snap To – Document Bounds”, move the picture up to the top of the frame. Then, to finish the conversion to 2:1, go to Image – Image Size, unlink width and height, and set the width to 200% (or the height to 50%).

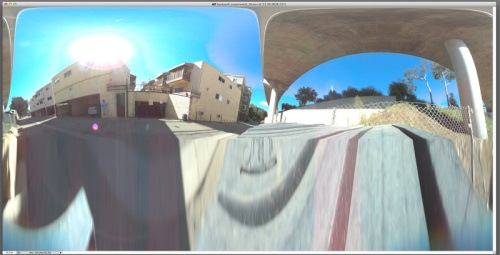
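Why 2:1? In an equirectangular image each column is a step in longitude (360 degrees across) and each row is a step in latitude (180 degrees from top to bottom), so a 2:1 frame gives every pixel the same angular width and height. This sketch assumes longitude -180 at the left edge and the zenith on the top row; where longitude zero sits varies between 3D packages, and the image sizes are just examples.

```python
def pixel_to_lonlat(x, y, width, height):
    """Map the center of pixel (x, y) in an equirectangular image to degrees.

    Longitude runs -180 to 180 from left to right; latitude runs +90 (top) to -90 (bottom).
    """
    lon = (x + 0.5) / width * 360.0 - 180.0
    lat = 90.0 - (y + 0.5) / height * 180.0
    return lon, lat

def degrees_per_pixel(width, height):
    """Angular size of one pixel horizontally and vertically."""
    return 360.0 / width, 180.0 / height

print(degrees_per_pixel(4096, 2048))  # → (0.087890625, 0.087890625)  2:1, square pixels
print(degrees_per_pixel(4096, 4096))  # → (0.087890625, 0.0439453125)  1:1, squashed
```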

To fill in the bottom of the image, use the Rectangular Marquee tool to drag a selection across the bottom of the picture and copy and paste it. Then press Control + T (Command + T on a Mac) and drag the bottom handle down to the bottom of the document to stretch the pixels.

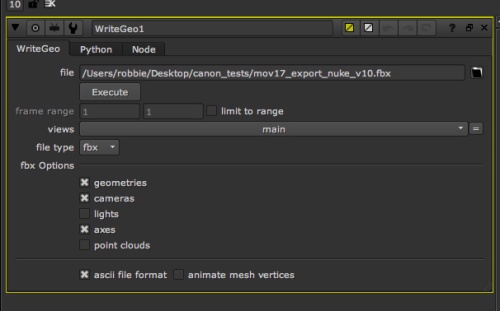
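The copy-and-stretch fill amounts to resampling a thin strip of real pixels so it covers the empty band. Here is a minimal nearest-neighbor sketch on an image stored as a list of rows; `content_rows` and `strip_rows` are hypothetical parameters standing in for where your picture ends and how tall your marquee selection is.

```python
def stretch_bottom(img, content_rows, strip_rows):
    """Fill the empty bottom of an image by stretching the lowest strip of real pixels.

    img: list of rows; only the first `content_rows` rows hold picture data.
    strip_rows: height of the selected strip, counted up from the last real row.
    The strip is stretched from its top edge down to the bottom of the canvas.
    """
    if not 0 < strip_rows <= content_rows <= len(img):
        raise ValueError("strip must sit inside the picture area")
    top = content_rows - strip_rows
    strip = img[top:content_rows]
    out = [list(row) for row in img[:top]]  # rows above the strip are untouched
    span = len(img) - top                   # rows the stretched strip must cover
    for i in range(span):
        src = i * strip_rows // span        # map each output row back into the strip
        out.append(list(strip[src]))
    return out

# Six rows of picture, four empty rows; stretch the bottom two picture rows
img = [[row] for row in range(6)] + [[None] for _ in range(4)]
print([r[0] for r in stretch_bottom(img, 6, 2)])  # → [0, 1, 2, 3, 4, 4, 4, 5, 5, 5]
```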

Save your final image as an OpenEXR (.exr) file. It is now ready to use as an image-based lighting (IBL) map in your favorite 3D software package.

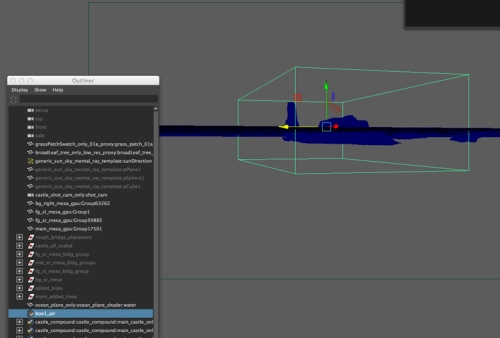

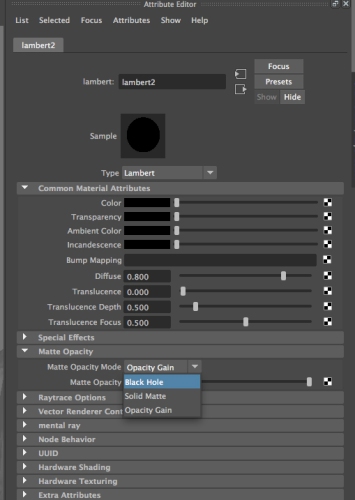

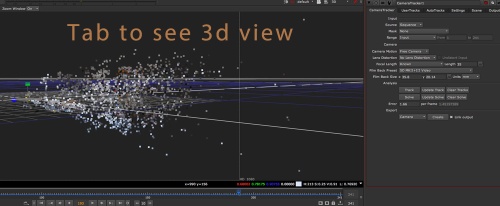

IBL in Maya, for example.

Here is a test render using this map on some simple geometry. The scene is lit only by the IBL map. The trees and sky behind the striped back wall come from a photo I took.

There are higher-resolution ways to generate an HDR spherical map, but without stitching and all kinds of additional steps, this is a quick and inexpensive way to build up a reference library of HDR panoramas for IBL use.